The OculusRift service has been updated to support the CV1 version of the Oculus Rift. The service is still pretty rough and needs some polishing, but it's functional, if nothing else.

The OculusRift service consists of two OpenCV services that together publish a pair of images, one from each camera. It also adds a transpose filter and an affine filter. The transpose filter is needed because the cameras in my InMoov head are rotated 90 degrees; the idea is that this more naturally matches the resolution of the display in the Oculus Rift. So, I add either 1 or 3 transpose filters, depending on whether it's the left or the right eye.
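Note that a plain matrix transpose is its own inverse, so three transposes equal one. The "1 or 3" scheme therefore implies the filter rotates the frame by 90 degrees (a transpose plus a flip). The minimal sketch below assumes exactly that, modelling a frame as an `int[][]` pixel grid; `EyeRotation` is a hypothetical name, not MRL's filter class. One rotation gives +90 degrees (clockwise) and three give -90 degrees, which is how each eye can be turned to match its camera's mounting.

```java
import java.util.Arrays;

/**
 * Sketch only: assumes the transpose filter acts like a 90-degree clockwise
 * rotation (transpose followed by a horizontal flip). Not MRL code.
 */
final class EyeRotation {

    /** Rotates a rows x cols grid 90 degrees clockwise, returning a cols x rows grid. */
    static int[][] rotateClockwise(int[][] src) {
        int rows = src.length;
        int cols = src[0].length;
        int[][] dst = new int[cols][rows];
        for (int r = 0; r < rows; r++) {
            for (int c = 0; c < cols; c++) {
                // Transpose (r, c) -> (c, r), then mirror the new column index.
                dst[c][rows - 1 - r] = src[r][c];
            }
        }
        return dst;
    }

    /** Applies the clockwise rotation n times (e.g. 1 for one eye, 3 for the other). */
    static int[][] rotate(int[][] src, int times) {
        int[][] out = src;
        for (int i = 0; i < times; i++) {
            out = rotateClockwise(out);
        }
        return out;
    }

    public static void main(String[] args) {
        int[][] frame = { {1, 2, 3}, {4, 5, 6} }; // 2 rows x 3 cols, a "landscape" frame
        System.out.println(Arrays.deepToString(rotate(frame, 1))); // [[4, 1], [5, 2], [6, 3]]
        System.out.println(Arrays.deepToString(rotate(frame, 3))); // [[3, 6], [2, 5], [1, 4]]
    }
}
```

The two eyes end up rotated in opposite directions, so cameras mounted at 90 degrees in opposite orientations can both be brought upright.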

These two images are assembled into a RiftFrame object, which contains one left-eye image and one right-eye image.
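Assuming the two OpenCV services publish their frames independently, something has to hold on to one eye's image until the other arrives. Here is a minimal sketch of that pairing step, assuming `java.awt.image.BufferedImage` frames; `StereoPair` and `StereoAssembler` are hypothetical names standing in for RiftFrame and whatever assembles it, not MRL's actual classes.

```java
import java.awt.image.BufferedImage;

/** Hypothetical stand-in for RiftFrame: one left-eye and one right-eye image. */
final class StereoPair {
    private final BufferedImage left;
    private final BufferedImage right;

    StereoPair(BufferedImage left, BufferedImage right) {
        if (left == null || right == null) {
            throw new IllegalArgumentException("both eye images are required");
        }
        this.left = left;
        this.right = right;
    }

    BufferedImage left() { return left; }
    BufferedImage right() { return right; }
}

/** Pairs frames arriving separately from the left and right camera services. */
final class StereoAssembler {
    private BufferedImage pendingLeft;
    private BufferedImage pendingRight;

    /** Called when the left camera publishes; returns a pair if a right image is waiting. */
    synchronized StereoPair onLeft(BufferedImage img) {
        pendingLeft = img;
        return tryEmit();
    }

    /** Called when the right camera publishes; returns a pair if a left image is waiting. */
    synchronized StereoPair onRight(BufferedImage img) {
        pendingRight = img;
        return tryEmit();
    }

    /** Emits a pair once both eyes have an image, then clears both slots. */
    private StereoPair tryEmit() {
        if (pendingLeft == null || pendingRight == null) {
            return null;
        }
        BufferedImage l = pendingLeft;
        BufferedImage r = pendingRight;
        pendingLeft = null;
        pendingRight = null;
        return new StereoPair(l, r);
    }
}
```

This version emits a pair only once both eyes have a fresh image and then resets. A real implementation might instead keep the latest frame from each eye and pair on every update, trading exact pairing for lower latency.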

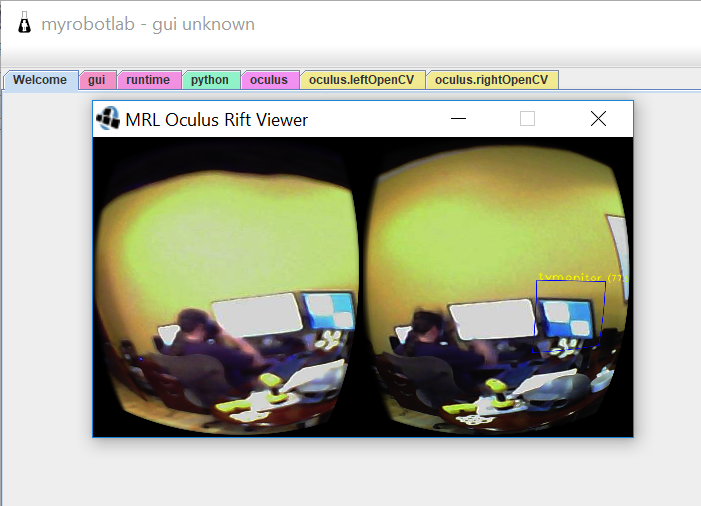

The OculusDisplay class is responsible for rendering that stereo pair on the Rift display and mirroring it back to your desktop display (as shown above).
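The Oculus rendering itself goes through OpenGL via LWJGL, but the desktop mirror is essentially the two eye images placed side by side. Here is a minimal plain Java2D sketch of that composition, useful for previewing a stereo pair without a headset; `SideBySide` is a hypothetical helper, not part of OculusDisplay.

```java
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;

/** Sketch: builds a side-by-side preview of a stereo pair (not MRL code). */
final class SideBySide {

    /** Places the left eye on the left half and the right eye on the right half. */
    static BufferedImage compose(BufferedImage left, BufferedImage right) {
        int w = left.getWidth() + right.getWidth();
        int h = Math.max(left.getHeight(), right.getHeight());
        BufferedImage out = new BufferedImage(w, h, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = out.createGraphics();
        try {
            g.drawImage(left, 0, 0, null);                // left eye starts at x = 0
            g.drawImage(right, left.getWidth(), 0, null); // right eye starts where the left ends
        } finally {
            g.dispose(); // release the graphics context
        }
        return out;
    }
}
```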

There is also the OculusHeadTracking class, which constantly monitors the user's head orientation and position in space. This gives us roll, pitch, yaw, x, y and z coordinates for the head. These are published in the publishOrientation and publishPoints methods (this should be cleaned up and refactored... but for now, it 'tis what it 'tis). This data can be used to direct the servos in the InMoov head and neck, so that as you look left, the InMoov head turns left and you see out of the InMoov head to the left.
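Driving the neck from head tracking mostly comes down to offsetting each angle from the servo's center and clamping it to the servo's range. Here is a minimal sketch under stated assumptions: yaw and pitch arrive in degrees with 0 meaning "looking straight ahead" (if the tracker reports radians, convert first with `Math.toDegrees`), and the neck servos are centered at 90 degrees with a 0 to 180 range. `HeadToServo` and its `inverted` flag are hypothetical, not MRL's API.

```java
/** Sketch: maps a head angle to a servo position (hypothetical, not MRL code). */
final class HeadToServo {
    static final double CENTER = 90.0; // assumed servo position for "straight ahead"
    static final double MIN = 0.0;     // assumed servo lower limit
    static final double MAX = 180.0;   // assumed servo upper limit

    /**
     * Converts a head angle (degrees, 0 = straight ahead) to a clamped servo angle.
     * Set inverted when the servo is mounted so that it turns the opposite way.
     */
    static double toServo(double headDegrees, boolean inverted) {
        double target = inverted ? CENTER - headDegrees : CENTER + headDegrees;
        return Math.max(MIN, Math.min(MAX, target));
    }

    public static void main(String[] args) {
        double yaw = 30.0;    // head turned 30 degrees
        double pitch = -10.0; // head tilted slightly down
        System.out.println("neck rotate servo: " + toServo(yaw, false));
        System.out.println("neck tilt servo:   " + toServo(pitch, true));
    }
}
```

The `inverted` flag exists because which direction counts as "left" depends on how each servo is mounted in the head.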

As an extra special bonus, I added the Yolo filter to the right eye, so while you're wearing the Rift you see real-time boxes with information around the recognized objects in your field of view, giving a start on a heads-up display :)
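The basic building block of such a heads-up display is drawing a labeled box onto one eye's image. Here is a minimal Java2D sketch; the `Detection` type and `HudOverlay` helper are hypothetical and do not reflect the actual output format of MRL's Yolo filter.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;
import java.util.Locale;

/** Sketch: overlays a recognized object's box and caption on an eye image. */
final class HudOverlay {

    /** Hypothetical detection: a label, a confidence in [0, 1], and a box in pixels. */
    static final class Detection {
        final String label;
        final double confidence;
        final int x, y, width, height;

        Detection(String label, double confidence, int x, int y, int width, int height) {
            this.label = label;
            this.confidence = confidence;
            this.x = x;
            this.y = y;
            this.width = width;
            this.height = height;
        }
    }

    /** Formats the caption shown above the box, e.g. "cup 50%". */
    static String caption(Detection d) {
        return String.format(Locale.ROOT, "%s %.0f%%", d.label, d.confidence * 100);
    }

    /** Draws only the green rectangle outline. */
    static void drawBox(BufferedImage eye, Detection d) {
        Graphics2D g = eye.createGraphics();
        try {
            g.setColor(Color.GREEN);
            g.drawRect(d.x, d.y, d.width, d.height);
        } finally {
            g.dispose();
        }
    }

    /** Draws the box plus its caption; the text baseline is kept at least 12 px from the top. */
    static void draw(BufferedImage eye, Detection d) {
        drawBox(eye, d);
        Graphics2D g = eye.createGraphics();
        try {
            g.setColor(Color.GREEN);
            g.drawString(caption(d), d.x, Math.max(d.y - 4, 12));
        } finally {
            g.dispose();
        }
    }
}
```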

If anyone out there has an Oculus, I'd love to get your feedback and see if you could help test.

Other 3D Viewers.

I don't have an Oculus Rift setup (it's on my wish list), but I was wondering how hard it would be to add other 3D viewers to this service, such as Google Cardboard.

I believe there is something similar for iPhones as well, but I don't know its name.

At this point I can't tell you much about the Google Cardboard other than:

1) It uses an android phone.

2) It's wireless.

3) It has accelerometers to report back the roll, pitch and yaw. It may report X, Y and Z as well.

4) It's a cheap, entry-level solution in the 3D viewer market.

It's just a thought.


Hello Ray

iPhones actually support Google Cardboard as well, though I'm not sure how portable a Unity project would be (Unity would be the best solution because of its ease of use and because Google's Cardboard API was originally built specifically for it). There are several issues with porting the Oculus Rift interface to Cardboard, however.

First, from what I understand, this Rift service uses Rift-specific APIs. These would have to be swapped out for RPC calls, and in order to add more viewers we would likely have to refactor the service to abstract everything. Second, one would need an MRL-compatible RPC server on the Unity side, written in either C# or JS (the two best-supported scripting languages for Unity). No MRL client libraries exist yet for these two languages (MRL's webgui includes a JS library for communication, but it's designed for UI events, and I'm not sure it would function properly outside of the browser).
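To make "abstract everything" concrete, one possible shape is a viewer interface that the service talks to, with one implementation per headset (Rift, a Cardboard client reached over RPC, and so on). This is a minimal sketch under that assumption; `StereoViewer`, `ViewerHub` and `CountingViewer` are hypothetical names, images are modelled as opaque byte arrays, and none of it is existing MRL code.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical abstraction over any stereo headset. */
interface StereoViewer {
    /** Displays one stereo frame; images are opaque encoded bytes in this sketch. */
    void show(byte[] leftEye, byte[] rightEye);

    /** Latest head orientation as {roll, pitch, yaw}. */
    double[] orientation();
}

/** Fans frames out to every attached viewer and reads tracking from the first one. */
final class ViewerHub {
    private final List<StereoViewer> viewers = new ArrayList<>();

    void add(StereoViewer v) {
        viewers.add(v);
    }

    /** Sends the same stereo pair to every attached viewer. */
    void publish(byte[] left, byte[] right) {
        for (StereoViewer v : viewers) {
            v.show(left, right);
        }
    }

    /** Orientation from the first attached viewer, or null if none is attached. */
    double[] headOrientation() {
        return viewers.isEmpty() ? null : viewers.get(0).orientation();
    }
}

/** Stand-in viewer that only counts frames; a Rift or Cardboard backend would replace it. */
final class CountingViewer implements StereoViewer {
    int frames;

    public void show(byte[] leftEye, byte[] rightEye) {
        frames++;
    }

    public double[] orientation() {
        return new double[] {0.0, 0.0, 0.0};
    }
}
```

With this split, the camera and filter pipeline stays the same, and adding a new headset means writing one more `StereoViewer` implementation.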

A workaround would be to embed an MRL instance and connect using the remote adapter service, but that requires running a JVM inside the .NET runtime (using IKVM), which, in my experience, is incredibly slow. The best option is to build a C# client library for MRL and use it inside Unity to implement the communication interface between MRL and the Cardboard device. Building such a client library wouldn't be too difficult, since C# is syntactically similar to Java. The only issue is time and effort. C# and the .NET runtime as a whole are seldom used for robotics applications, so the potential reward for developing and maintaining such a client library is small compared to the effort needed. A JS library would make more sense, since we already have the building blocks for it in the webgui. However, JS is not as well supported in Unity as C#, and from what I understand there are limitations to what it can do.

My advice: give it a shot using an embedded MRL instance inside IKVM. It'll probably be slow, and startup time will be quite long, but it should be functional for some basic tests. If IKVM static compilation (which allows Java to talk to C# in-process) fails, you'll have to build a small remote-adapter-compatible Java application, compile it with IKVM to a DLL, and then connect to that from MRL. Then, if the interest is there, we can develop a .NET or JS client library to replace the embedded MRL instance.

TL;DR:

It's definitely possible, but it's a very long road.

LWJGL / JMonkeyEngine3

Currently, from Java, we do all of the rendering for the Oculus with the Java bindings for OpenGL called "LWJGL", which stands for "Lightweight Java Game Library" ... JMonkeyEngine3, which powers the virtual InMoov rendering, also uses this same library. For Google Cardboard, honestly, I think the way to go is some JavaScript that renders a stereo pair of images and lets you open it with Google Cardboard. We should be able to wire something like this into the WebGui ... That's the easiest / best path forward from my perspective.

We could probably use something like Three.js to render the stereo pair for us. I am pretty sure this is the same library that was used in the very, very crude UI for InverseKinematics3D.