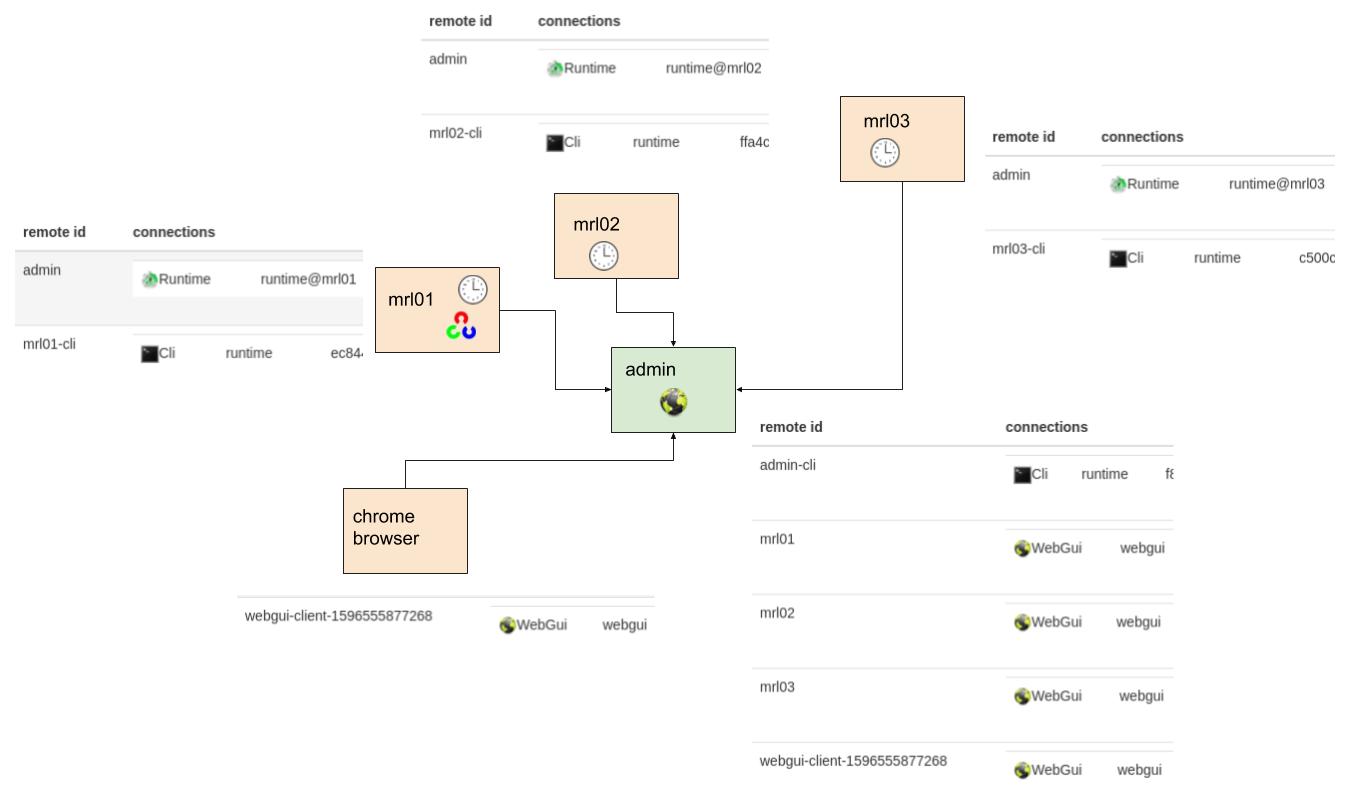

Here are the distributed mrl instances I currently have working locally. It's currently a star topology with 3 mrl instances and a web-browser client. The UI now has complete interactivity with all services on all instances. I started testing with mrl instances having 13 clocks each, making sure I could start a clock service and, from the UI, start and stop the clock (control messages) while seeing the clock pulses update the UI.

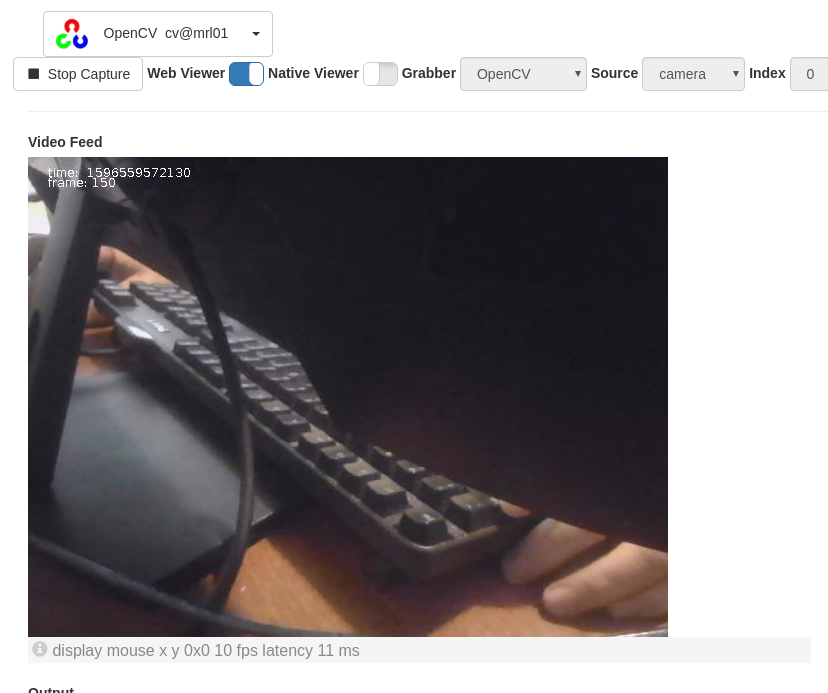

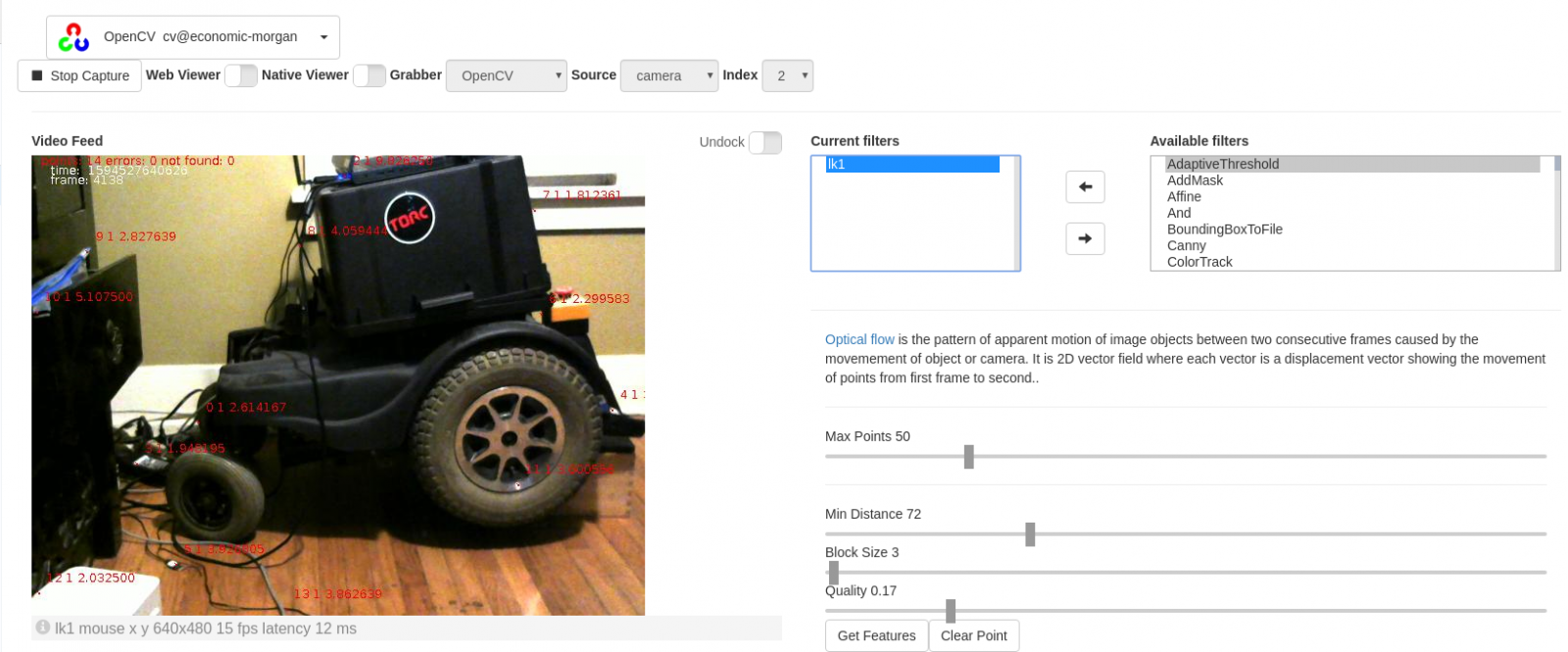

If you look at the paths, you'll notice that clock pulses need to be relayed or routed from the instances "through" the admin, then to the browser. As a challenge I started opencv on mrl01 and started a capture. As you can see below ... WORKY ! .. it worked without errors, without python scripts, or special handling. I have a video feed routed from mrl01 "through" the admin to the webgui.
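To illustrate the relay idea, here is a minimal sketch of next-hop routing in a star topology. It is not the MyRobotLab implementation: the `Instance` class, its `routes` table, and the `send` method are all hypothetical names made up for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """Hypothetical stand-in for one mrl instance or the browser; not the MyRobotLab API."""
    id: str
    links: dict = field(default_factory=dict)   # neighbor id -> Instance
    routes: dict = field(default_factory=dict)  # destination id -> neighbor id (next hop)
    inbox: list = field(default_factory=list)   # (payload, path) tuples delivered here

    def connect(self, other):
        """Create a direct two-way link; direct neighbors route to themselves."""
        self.links[other.id] = other
        other.links[self.id] = self
        self.routes[other.id] = other.id
        other.routes[self.id] = self.id

    def send(self, dest_id, payload, path=()):
        """Deliver locally or hand the message to the next hop; False if no route."""
        path = path + (self.id,)
        if dest_id == self.id:
            self.inbox.append((payload, path))
            return True
        next_hop = self.routes.get(dest_id)
        if next_hop is None:
            return False
        return self.links[next_hop].send(dest_id, payload, path)


# Star topology: everything hangs off the admin (names are illustrative).
admin, mrl01, browser = Instance("admin"), Instance("mrl01"), Instance("browser")
admin.connect(mrl01)
admin.connect(browser)
mrl01.routes["browser"] = "admin"   # mrl01 only reaches the browser via the admin

mrl01.send("browser", "clock pulse")
payload, path = browser.inbox[0]
print(" -> ".join(path))
```

The point of the sketch is that mrl01 never needs a direct link to the browser; it only needs to know which neighbor is the next hop.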

I'm sure there are bugs to be squashed, but initial results from my work are encouraging. I am curious how more instances in more complex topologies will work together. For example, if mrl01 started a webgui service, then another mrl instance could attach to it. Would all of its service information propagate through all of the instances?

More Tests !

Video feed from cv@mrl01 routed through another mrl instance to show on the web UI

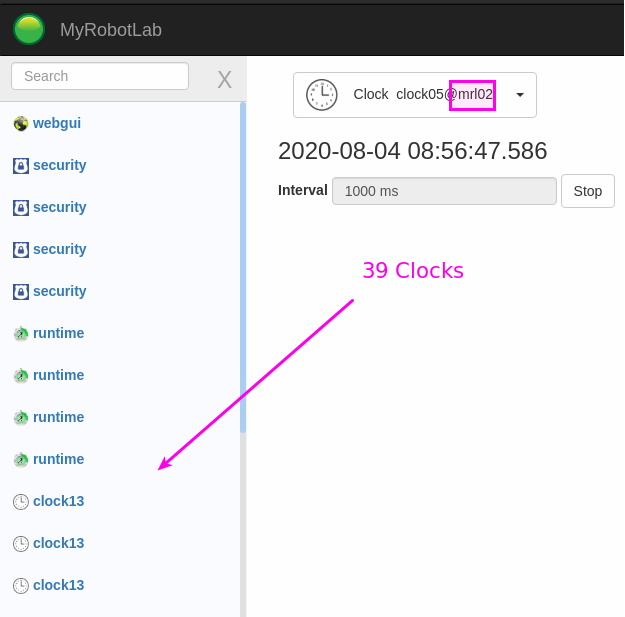

4 instances, 4 security services, 1 webgui, 1 browser and 39 clocks ... all worky !

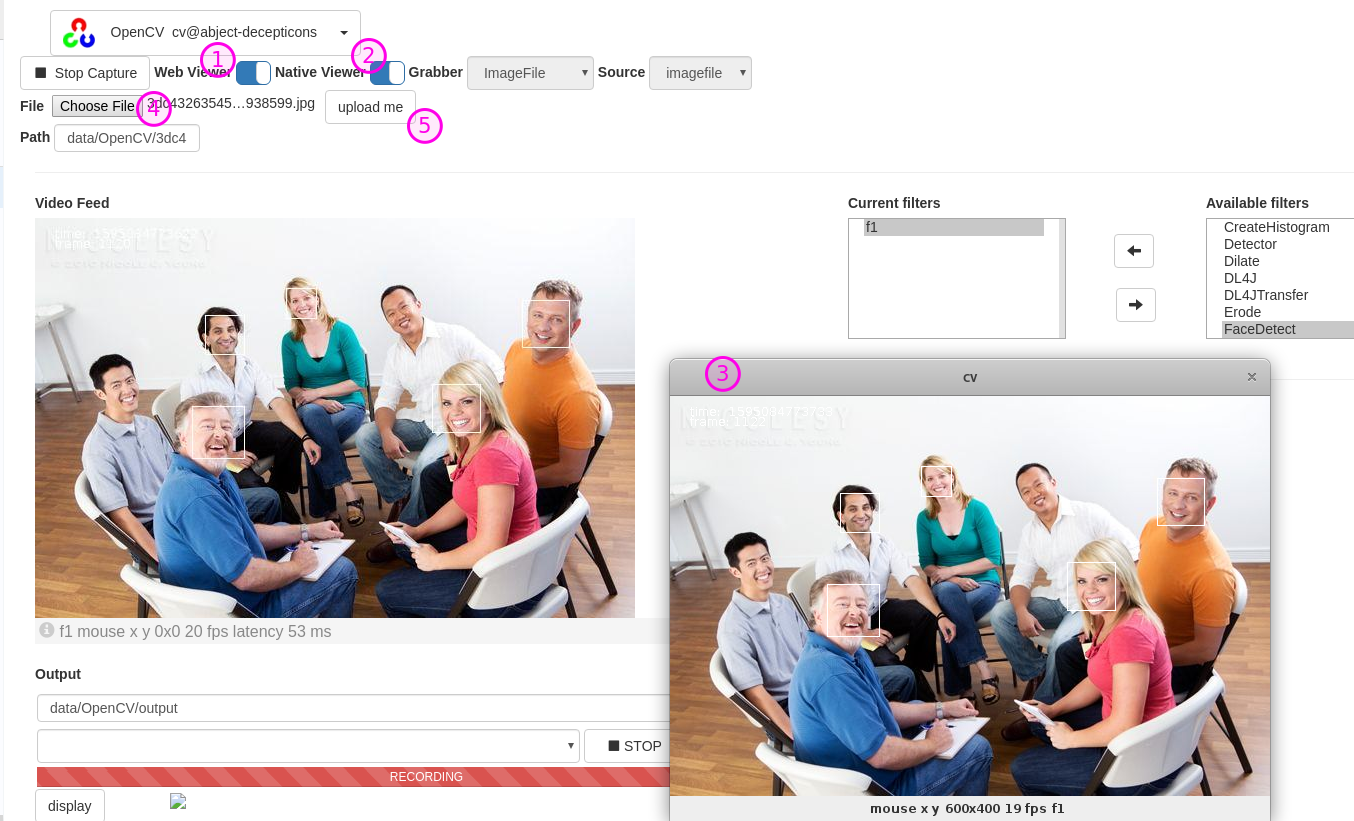

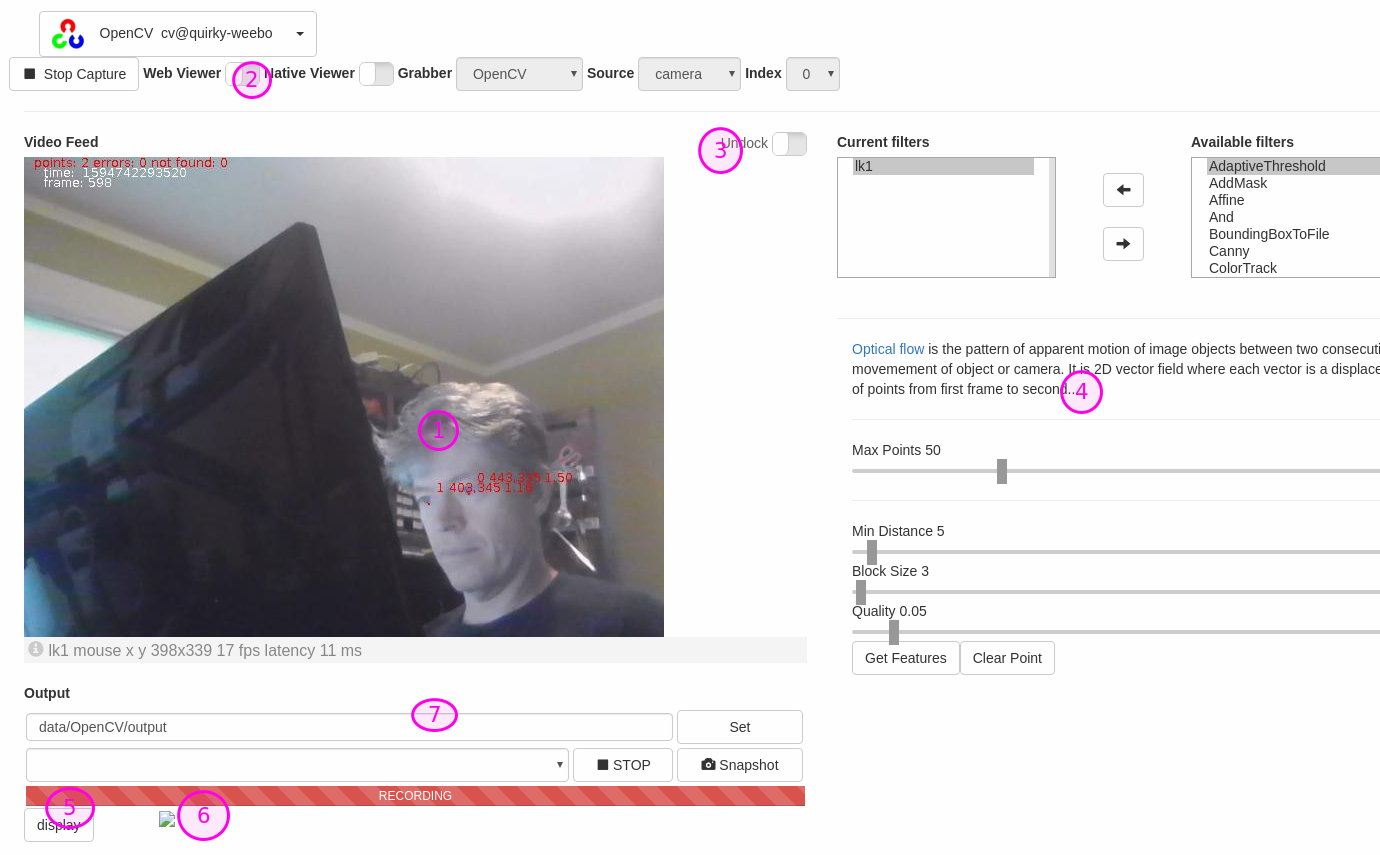

More progress with OpenCV webgui.

1) Web Viewer now enables and disables web viewer stream and publishing

2 & 3) Native Viewer starts and stops a Swing VideoWidget2 ... I wanted to use javacv's CanvasFrame but found it unreliable for displaying - often it would come up blank until you resized it ... I tried a variety of swing strategies to get it to work properly .. pack() invalidate() but it was still blank 50% of the time. Anyway, VideoWidget2 has a nice feature of being able to trap mouse clicks in the stream (same as web viewer now)

3) I added the extra fields necessary to support the ImageFile Grabber. (4) It's a little bit klunky, but you can choose a file and upload it to the mrl server to process. Additionally, if you put a URL in Path, it will fetch it, put it in the data directory, then load and process it. You've got to hit the (5) upload button for the file to be uploaded - perhaps selecting the file should trigger the upload on its own.

TODO - need to wrap up/make worky all the output controls. Then I think it's just implementing the filter dialogs that are most interesting... that's my plan.

I'll merge this with webgui_work and cut a pr shortly..

Progress !

Update 20200714

There was a threading issue relating to a thread from the webgui partially updating a filter's state while the video processor thread processes the display. I fixed that simply and robustly by having the video processing thread do the update. Previously NPEs were being thrown, now - rock solid.
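A minimal sketch of that pattern, assuming the fix works roughly like a hand-off queue: the UI thread only enqueues requested changes, and the processing thread applies them between frames. The class, the `offset` setting, and the method names are hypothetical and are not taken from the OpenCV service.

```python
import queue


class VideoProcessor:
    """Hypothetical processor that owns its filter state (not the MRL OpenCV service)."""

    def __init__(self):
        self.filter_state = {"offset": 10}   # illustrative filter setting
        self._pending = queue.Queue()        # thread-safe hand-off from the UI thread

    def request_update(self, key, value):
        """Called from the UI thread: only enqueue, never touch filter_state."""
        self._pending.put((key, value))

    def _apply_pending(self):
        """Called from the processing thread: drain and apply queued updates."""
        while True:
            try:
                key, value = self._pending.get_nowait()
            except queue.Empty:
                return
            self.filter_state[key] = value

    def process_frame(self, frame):
        """Apply queued updates first, so the state never changes mid-frame."""
        self._apply_pending()
        offset = self.filter_state["offset"]
        return [min(255, pixel + offset) for pixel in frame]


vp = VideoProcessor()
vp.request_update("offset", 5)            # e.g. a slider moved in the web UI
brightened = vp.process_frame([0, 250, 255])
```

Because only one thread ever writes the filter state, the processing code never sees a half-applied update.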

I'm not sure how many dialogs I want to create (or re-create), but it would be very helpful if Astro thought about a good uniform design. I guess it's not too bad with some description of what the filter does at the top, and the various controls below. It's a start anyway.

Update 20200711

The dialog for LKOpticalTrack, one of my favorite filters, is mostly functional with the major components.

I added a link in the description (bonus feature to have nice html markup to describe each dialog)

I still need to add the capability of setting a point with the mouse in the viewer. Was hoping maybe Astro could wire up a good mouse event.

So far I've done 5 filter dialogs, this was the first one which requires the setting of points using the mouse and (x,y) location of a click in the video stream view.
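As a rough sketch of what setting a point involves, the click position reported by the browser has to be translated into frame pixel coordinates, because the viewer may be scaled relative to the video frame. The function name and parameters below are assumptions for illustration only.

```python
def click_to_frame(click_x, click_y, view_left, view_top, view_w, view_h, frame_w, frame_h):
    """Map a click in page coordinates to (x, y) in video frame pixels.

    view_left/view_top/view_w/view_h describe where the viewer element sits
    on the page and how large it is drawn; frame_w/frame_h are the real
    frame dimensions. Returns None when the click falls outside the viewer.
    """
    rel_x = click_x - view_left
    rel_y = click_y - view_top
    if not (0 <= rel_x < view_w and 0 <= rel_y < view_h):
        return None
    # Scale from displayed size to actual frame size.
    return int(rel_x * frame_w / view_w), int(rel_y * frame_h / view_h)


# A 640x480 frame shown at 320x240, with the viewer's corner at (10, 20) on the page.
point = click_to_frame(110, 70, 10, 20, 320, 240, 640, 480)
```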

Now able to create masks with pngs ... I will add an easy interface to input a png with transparencies. Could possibly help with detection training.

Fun with inexpensive Fish Eye Lens !

Good stats (15 fps is what the camera does - 7ms latency ... latency also includes all the filter processing in addition to the network travel to the browser)

Update: Lots of worky stuffs !

I implemented a new way of video streaming to the webgui.

It's faster, does not require another service (like the VideoStreamer), does not require a different port (operates over the same ws connection as the rest of the gui), contains more meta information - such as latency and other arbitrary details - and uses fewer resources.
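To make the idea concrete, here is a sketch of a frame message that carries its own metadata, so it can travel over the same connection as other messages. The envelope layout and the field names (`ts`, `meta`, `data`) are assumptions; the actual wire format used by the webgui is not described here.

```python
import base64
import json


def encode_frame(jpeg_bytes, captured_ms, **meta):
    """Wrap one encoded frame plus metadata in a JSON text message (hypothetical layout)."""
    return json.dumps({
        "ts": captured_ms,    # capture time in milliseconds
        "meta": meta,         # arbitrary extra details, e.g. fps
        "data": base64.b64encode(jpeg_bytes).decode("ascii"),
    })


def decode_frame(message, now_ms):
    """Return (jpeg_bytes, latency_ms, meta) for a message built by encode_frame."""
    msg = json.loads(message)
    latency_ms = now_ms - msg["ts"]   # only meaningful if both clocks agree
    return base64.b64decode(msg["data"]), latency_ms, msg["meta"]


message = encode_frame(b"\xff\xd8\xff\xd9", 1000, fps=15)
jpeg, latency_ms, meta = decode_frame(message, 1007)
```

Measured this way, the latency figure covers everything between capture and display, which matches the note above that it includes filter processing as well as network travel.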

I got the grabber selection, camera index, grabber type and lots of other parts working.

Currently I'm working on the filter sub-dialogs and trying to find a way so that it will be easy to add the rest.

Hi Astro,

I was going to start making the UI parts of OpenCV you created actually work.

I thought I'd add a few toggle buttons for switching the native and web screens on and off, but when I added a <toggle and tried all the various classes, all I got was this:

[screenshot]

It looks like the toggle ui libraries' original css has been modified ?

Would it be better to leave the original css alone and add the overriding style at the top of the service page or, if it is to be shared among multiple services, in a new css file ?

I guess I'll use miniBTN because it looks to be the only thing that will work.

What do you think?

Hi GroG,

I think it can be a cascade effect if it is inside another style or id.

The first line has this style

<div class="OpenCVStyle preventDragSelect">

I think I am overwriting toggle when it is inside OpenCVStyle

It happens to me all the time: I modify something of bootstrap. Then, when I see with git status that I modified something that is not inside mrl.css, I do a git restore to keep the original. But it could be that I modified something at the beginning, when I started using git, without knowing it. I don't think I did, but I don't remember. You can overwrite the libraries with the original ones just in case, and if something looks different I can fix it.

You can put any toggle you want and I can fix it later, but I'm almost sure it's a cascade effect of another style.

You can try adding it first of all to see if it changes.

GroG,

I found it. You can comment out line 1772 in mrl.css

.OpenCVStyle .btn{...

It was not a good idea. Later I will correct the buttons that used that.

Thanks for all the information Astro.

I verified as you mentioned this was the problem

So I commented it out.

I'll look into the original css files later, but I don't want to do anything drastic and my css knowledge is not as extensive as yours.

Time to make this OpenCV worky !

Mouse coordinates

Hi GroG!

I think this can work.

https://www.w3schools.com/jsref/tryit.asp?filename=tryjsref_event_mouse_clientxy

Got it ! Perfect !

Thanks !

Hi GroG,

(4)...but it would be very helpful if Astro thought about a good uniform design...

Good. I will start doing something with it.

(5 and 6) I don't know what it's for either. That was already in the original html and I left it at the end because I did not understand what they were for. I guess you can delete them.

I've merged my changes into webgui_work...

(it should have my latest changes) - big amount of updates.

I think I'm missing a step again.

make_web_dev.bat

install in clean dir c:\dev

F12

Delete resource dir

git checkout "webgui_work"

git pull

"Already up to date."

F5

But the "OpenCV" grabber does not appear.

I press Start Capture and errors appear.

A directory appears that I don't have "c: \ projects ......"

[ WARN:2] global C:\projects\javacpp-presets\opencv\cppbuild\windows-x86_64\opencv-4.3.0\modules\videoio\src\cap_msmf.cpp (681) CvCapture_MSMF::initStream Failed to set mediaType (stream 0, (640x480 @ 30) MFVideoFormat_RGB24(unsupported media type)

Hi Astro,

You did everything correctly ...

But you need this jar

http://build.myrobotlab.org:8080/job/myrobotlab/view/change-requests/job/PR-761/2/artifact/target/

replace the one you currently have with it.

Yes, but no :(

I can see the FrameGrabber options now.

But now I remember that I can't use OpenCV because I use the RaspberryPi camera with UV4L

So I'm going to have to wait for the link option to be available because I access the camera like this

http://192.168.1.109:8080/stream

Format MJPEG Video (streamable).

But although I can't see the camera, I can see the filters when I add them below. I'm going to see if I can shape the design of that.

mjpeg confirmed worky - take a look above .. put your url in Path, make sure the MJpeg grabber is selected, then hit capture ... hopefully worky for you too ;)

I tried with your example

http://bob.novartisinfo.com/axis-cgi/mjpg/video.cgi

and it works, but not with my url, I think it is a UV4L format problem.

I sent a noworky.

I did a port forwarding if you want to test my camera

http://181.170.68.84/stream

This is the control panel http://181.170.68.84/panel

UPDATE WORKY!!!

In server info of UV4L I found the answer

"raw HTTP/HTTPS video streams in MJPEG, JPEG (continuous stills) and H264 formats - if supported - are available under the /stream/video.mjpeg, /stream/video.jpeg, and /stream/video.h264 URL paths respectively"

I tried this and it worked :)

http://181.170.68.84/stream/video.mjpeg
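A small sketch of how the raw stream URL can be derived from a UV4L server address, using only the three paths quoted from the UV4L server info above. The helper name and its defaults are made up for this example.

```python
from urllib.parse import urlsplit, urlunsplit

# Raw stream paths as quoted from the UV4L server info above.
UV4L_RAW_PATHS = {
    "mjpeg": "/stream/video.mjpeg",
    "jpeg": "/stream/video.jpeg",
    "h264": "/stream/video.h264",
}


def uv4l_stream_url(server_url, fmt="mjpeg"):
    """Build the raw stream URL for a UV4L server (hypothetical helper).

    Any path on server_url (such as the /stream web page) is replaced by
    the raw stream path for the requested format.
    """
    parts = urlsplit(server_url)
    return urlunsplit((parts.scheme, parts.netloc, UV4L_RAW_PATHS[fmt], "", ""))


# The page URL from earlier in the thread becomes a URL the MJpeg grabber can read.
print(uv4l_stream_url("http://192.168.1.109:8080/stream"))
```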

Cool, glad it worked - I tried it with your stream and got worky too.

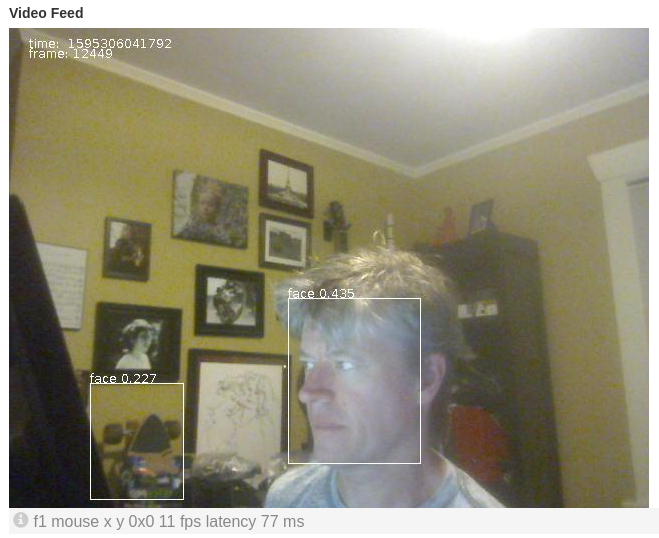

Tried DL4J - and it thinks you live in a cinema .... nice ..

Very cool panoramic lens - what kind is it? (The DL4J filter had a serialization error .. I fixed it locally and will push the fix soon)..

We're making lots of progress ...

it thinks you live in a cinema

Cool. :)

Here is the camera:

http://myrobotlab.org/content/pi-camera-streaming-uv4l-pc-mrl

Now I only have 7 fps and if I activate faceDetect it freezes.

In 2017 it reached 33 fps with the faceDetect activated.

Old screen capture:

Is the browser you're using to look at this through the webgui on the raspi ?

If so - you don't want to do this - try it from a laptop's browser

For me, I don't get more than 12 fps from your camera - regardless of whether face detect is running.

That's because there are over 256 hops from my laptop to your T-800's eye

It's like you're half a world away....

LAN speeds should be better.

Kwatter mentioned gzip compression too - which would help with network speed, additionally a bigger improvement could be done with H.262 - but I'd recommend checking LAN speed from a different puter first.

Testing

Hi Grog. Thanks for the reply.

additionally a bigger improvement could be done with H.262

UV4L has H264 (raw, streamable) option.

The Raspi only has Raspbian Stretch, with no GUI running; it only starts UV4L for streaming via ethernet cable to a PC with MRL.

I changed the ethernet cable and it improved a bit.

I did some tests in case some data helps you understand what happens:

Whenever I download a new version I comment out lines 451 and 874 in mrl.js to prevent Chrome from crashing with bazillions of errors.

In UV4L, with the camera set to more than 30 FPS, I can reach 25 FPS in MRL at most, but with many frames skipped.

I tried Chrome, Firefox and Brave.

Camera 30 FPS - Chrome 17 FPS (skipped frames)

Camera 30 FPS - Firefox 25 FPS (skipped frames)

In this video you can see something strange.

https://www.youtube.com/watch?v=8wk-GRltOwI

I start with 30 FPS on the camera; it skips a lot of frames and shows about 18 or 20.

I go up to 40 and it reaches 22 FPS, skipping more frames.

I drop it to 20 and it looks stable, skipping 1 per second.

I lower it to 12 and it's totally stable. I can go up to 20 and it's still stable too.

At 25 it starts skipping constantly.

I apply filters but sliders don't work for me.

I apply FaceDetect and an error "getting grabber failed" appears:

LoopGroup-3-6] INFO class org.myrobotlab.service.WebGui - invoking local msg runtime@webgui-client-1234-5678.sendTo --> op@guiltless-r2d2.addFilter("face","FaceDetect") - 1595287828472

-processor-1] INFO c.m.opencv.OpenCVFilterFaceDetect - Starting new classifier haarcascade_frontalface_alt2.xml

-processor-1] ERROR class org.myrobotlab.service.OpenCV - getting grabber failed

tion: OpenCV(4.3.0) C:\projects\javacpp-presets\opencv\cppbuild\windows-x86_64\opencv-4.3.0\modules\objdetect\src\cascadedetect.cpp:1689: error: (-215:Assertion failed) !empty() in function 'cv::CascadeClassifi

o.opencv.opencv_objdetect.CascadeClassifier.detectMultiScale(Native Method) ~[opencv-4.3.0-1.5.3.jar:4.3.0-1.5.3]

lab.opencv.OpenCVFilterFaceDetect.process(OpenCVFilterFaceDetect.java:215) ~[myrobotlab-1.1.2.jar:1.1.2]

lab.service.OpenCV.processVideo(OpenCV.java:1289) ~[myrobotlab-1.1.2.jar:1.1.2]

lab.service.OpenCV.access$100(OpenCV.java:139) ~[myrobotlab-1.1.2.jar:1.1.2]

lab.service.OpenCV$VideoProcessor.run(OpenCV.java:199) ~[myrobotlab-1.1.2.jar:1.1.2]

hread.run(Unknown Source) [na:1.8.0_241]

-processor-1] INFO c.m.opencv.MJpegFrameGrabber - Framegrabber release called

-processor-1] INFO class org.myrobotlab.service.OpenCV - run - stopped capture

x_0] ERROR class org.myrobotlab.service.WebGui - WebGui.sendRemote threw

...

5:44 "Stop Capture" doesn't work, I have to remove the filter to stop it.

And now at 20 FPS there are a lot of skipped frames. I drop it to 12 and it continues with a lot of skipped frames. I have to go up to 20, go down to 12 and go back up to 20 for it to stabilize.

Here you can see a test with an old version of MRL that I did now. The video is long, but you can jump to 1:15 where you can see the speed of the FaceDetect by detecting Sarah Connor's face with a lot of speed.

https://www.youtube.com/watch?v=bJIGp7zVfsA

Perhaps it's just my impression, because swingui updates the FPS value so quickly that it is hard to read but seems high; perhaps when FaceDetect works on my Nixie it will look the same.

Your reports are very helpful Astro.

FaceDetect failed, because there is some unserializable part ... I'll look into it.

Which sliders do not work ?

I have some raspi's I'll keep testing with too.

I'm interested in testing jpeg lossy encoding ratio and zipping to see how much improvement that offers.

I did some experimentation - found that

usb-camera --> raspi --> mjpg_streamer --> wifi --> laptop --> myrobotlab/OpenCV MJpeg grabber --> webgui

has so far worked the best. It's about ~14 fps coming off the raspi into a browser, and nearly the same going to myrobotlab running opencv's mjpeg frame grabber - then being displayed on a local browser. Adding filters didn't have much impact, and they worked - I ran "Flip" & "FaceDetect"

FaceDetect seems pretty sensitive to the face being forward.

FaceDetectDNN is more robust - and will detect at greater angles

Not much difference in speed .. although facedetectdnn on average I would say is more robust

Although sometimes it thinks my long board is a face (23%) .. Interesting that it never picks the photos...

I found where FaceDetectDNN crashes - I'll push a fix soon.

There is a way to lower the jpg quality going to the webgui from mrl too - but I have not experimented with that

Great GroG.

Thanks for your tests.

Later I will continue experimenting. Great progress!

Hi GroG,

I corrected the slider style, now instead of custom-slider3 you can use custom-slider in OpenCV

class="custom-slider"

Pushed in webgui_work

Nice Astro ! ... thanks...

Next for me is the record part ...

Not sure what they wanted in the shout box ...

but I tested the following commands and it works in Nixie ..

to start a single instance on one computer do this

it should start a webgui on port http://localhost:8888

On a different computer you can do the following. -c means "connect", so if the computer where you ran the first command has an ip address of 192.168.0.30, you could run this on a different computer or raspi - and they should connect.

You should be able to see services from both on http://192.168.0.30:8888 if no firewalls are blocking traffic

I can see a few bugs in this connectivity (it's over websockets)...

I'm currently working on the same type of connectivity over mqtt

It's getting very close .. but still needs some work too