This is a quick tutorial on one way to isolate an object in a video stream. Once an object is isolated in the view, the computer can attempt to identify it, or locate it relative to some mechanical extension, such as a gripper. Using color to isolate objects is just one of many methods; edge detection is another. In fact, these different processes can be combined to derive more information from the video stream.

Hue, Saturation and Value (HSV) is another way to measure color. In most video streams the color of each pixel is divided into Red, Green, and Blue values. As the light in the view changes, these values change considerably. I was shocked to see how much the values changed from one video frame to the next! Webcams are great sensors, but the data they give at the digital level is very noisy. To limit some of this noise, or really to organize it in a different way, the image is converted to Hue, Saturation and Value. In simple form, Hue represents "what color", Saturation represents "how much color", and Value represents "how bright". This simplifies looking for colored objects: looking for a particular color means looking for a specific value or range of Hue.
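To make the point about lighting concrete, here is a minimal sketch using Python's standard `colorsys` module. The two RGB triples are made-up values standing in for the same pink surface under bright and dim light; they are not taken from the webcam in this tutorial, and `rgb_to_hsv_degrees` is just a helper name chosen for this example.

```python
import colorsys

def rgb_to_hsv_degrees(r, g, b):
    """Convert 8-bit RGB to (hue in degrees, saturation 0-1, value 0-1)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h * 360.0, s, v

# Hypothetical pixels: the same pink, once well lit and once at half brightness.
bright_pink = (240, 80, 160)
dim_pink = (120, 40, 80)

bright_hsv = rgb_to_hsv_degrees(*bright_pink)
dim_hsv = rgb_to_hsv_degrees(*dim_pink)

for name, (h, s, v) in (("bright", bright_hsv), ("dim", dim_hsv)):
    print("%-6s hue=%.1f saturation=%.2f value=%.2f" % (name, h, s, v))
```

All three RGB numbers change between the two pixels, but the hue and saturation come out the same and only the value drops. Real webcam pixels will not behave this cleanly, which is why the filter works with a range of hue rather than a single number.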

In MyRobotLab you will need a compatible webcam and the OpenCV service. I usually name mine "camera", but you can choose any name you prefer.

Add the InRange filter. It begins by converting your image to HSV.

In this demonstration I will be isolating the pink ball.

Highlight the newly named filter and its dialog controls will appear.

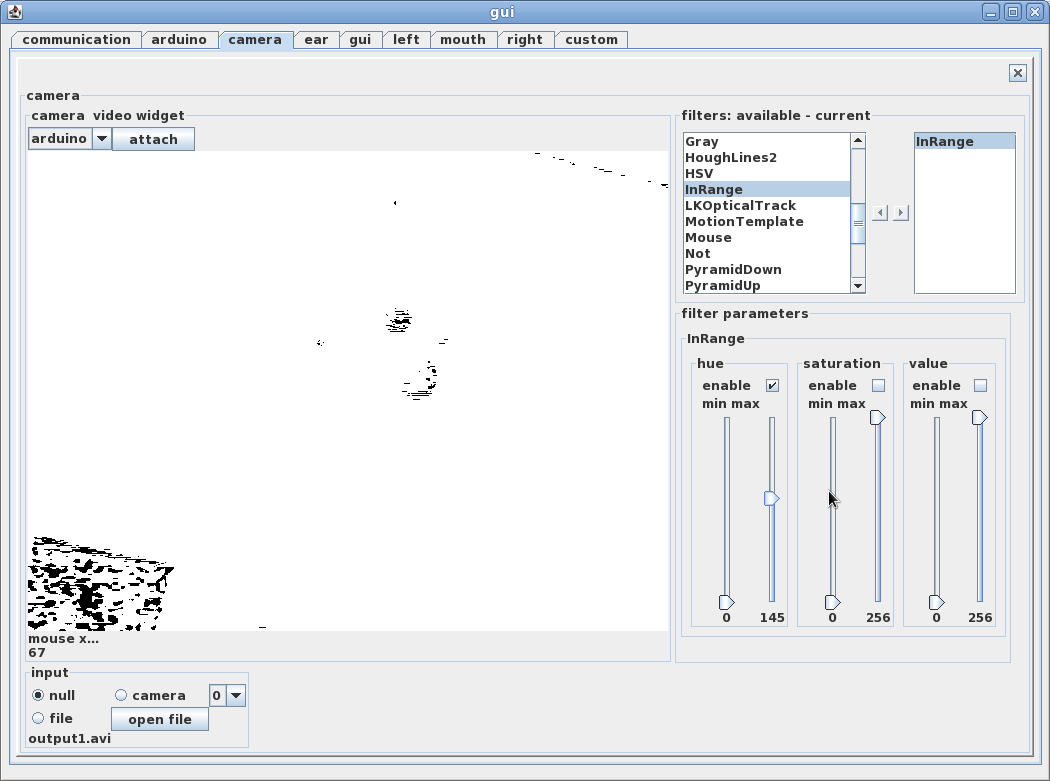

First we will enable the Hue (color) filter. When it is first enabled, a white screen should appear. This is because the default settings for Hue allow everything through, and the result is a white mask which represents matching pixels.

I will now lower the max hue until the object I am interested in turns black. This means the max value of hue is below what it needs to be for the pink ball.

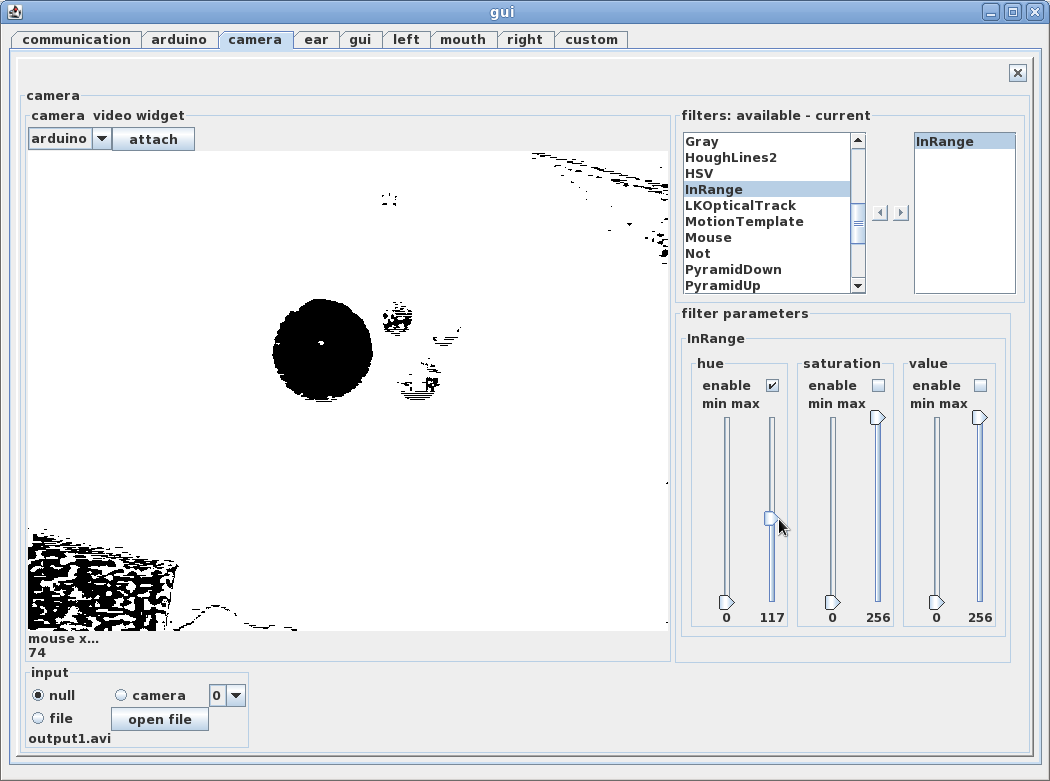

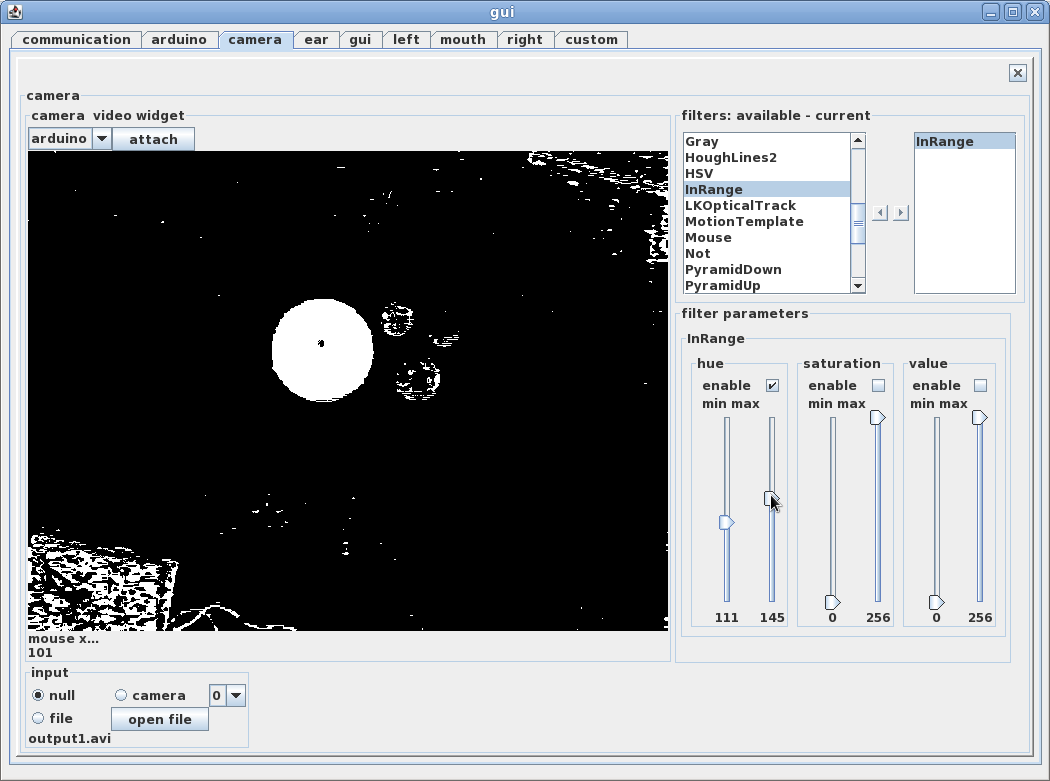

I'll now move the slider back up to allow "pink" to go through the filter.

Now I'll do the same thing with the min hue control, in an attempt to find the minimum and maximum "pink" color boundary. As you can see, there are little bits of "pink" in a lot of other objects.

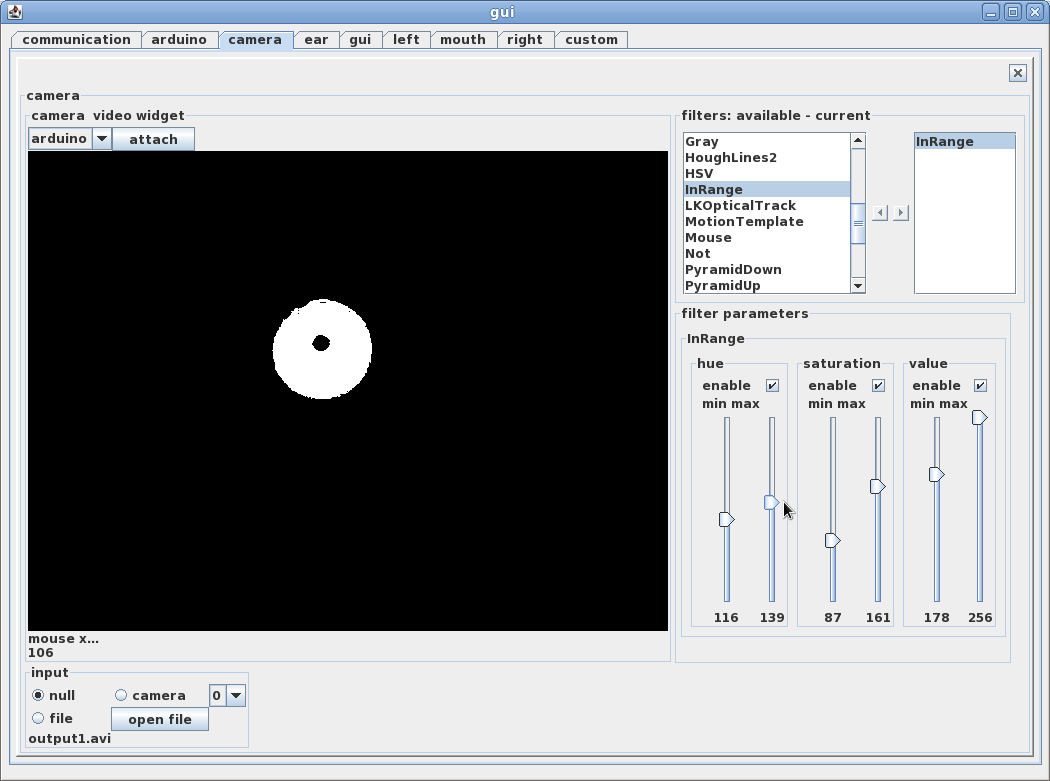

We will use the other two filters (Saturation and Value) to narrow and isolate the object of interest. At this point the object is pretty "clean"; there are a few speckles which flash on and off in the video stream, but it's not too bad. You can see the highlight on the ball as a black spot, since it does not come near our current values of "pink".

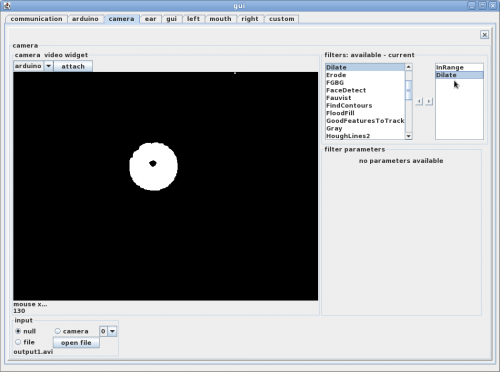
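The min/max sliders above boil down to a per-pixel range test. Here is a rough sketch of that idea in plain Python. It is not MyRobotLab's InRange code; the function name `in_range_mask`, the tiny hand-written frame, and the scales (hue in degrees, saturation and value from 0 to 1) are all assumptions for illustration, and the slider scales in the GUI may differ.

```python
def in_range_mask(hsv_pixels, h_range, s_range, v_range):
    """Return a mask with 255 where a pixel is inside all three ranges, else 0."""
    def inside(x, bounds):
        low, high = bounds
        return low <= x <= high

    return [
        [255 if inside(h, h_range) and inside(s, s_range) and inside(v, v_range) else 0
         for (h, s, v) in row]
        for row in hsv_pixels
    ]

# Hypothetical 2 x 3 frame of (hue, saturation, value) pixels.
frame = [
    [(330, 0.67, 0.94), (330, 0.10, 0.95), (120, 0.80, 0.50)],
    [(335, 0.60, 0.47), (325, 0.70, 0.05), (20, 0.90, 0.90)],
]

# Hue alone lets through every pixel that is vaguely "pink".
hue_only = in_range_mask(frame, (320, 340), (0.0, 1.0), (0.0, 1.0))

# Adding saturation and value limits drops the washed-out and the very dark pixels.
all_three = in_range_mask(frame, (320, 340), (0.4, 1.0), (0.2, 1.0))

print(hue_only)
print(all_three)
```

With hue alone, four of the six pixels match. With all three ranges, only the two pixels that are pink, colorful, and reasonably bright stay white.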

I usually like to add a Dilate filter to smooth out the edges and remove any black speckles from the ball.

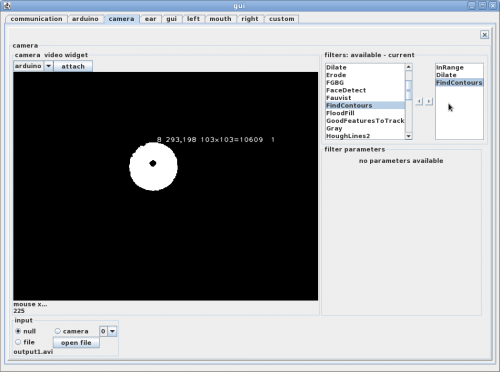
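For anyone curious what dilation does to the mask, here is a small sketch of one pass with a 3 x 3 neighborhood: each output pixel becomes the brightest pixel among itself and its neighbors. This is a plain-Python illustration, not the Dilate filter's actual implementation, and the 7 x 7 mask is a made-up stand-in for the ball with one black speckle in the middle.

```python
def dilate(mask):
    """One dilation pass: each pixel takes the max of its 3 x 3 neighborhood."""
    rows, cols = len(mask), len(mask[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            out[r][c] = max(
                mask[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
            )
    return out

# Hypothetical mask: a 3 x 3 white "ball" with a black speckle at its center.
mask = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 255, 255, 255, 0, 0],
    [0, 0, 255, 0, 255, 0, 0],
    [0, 0, 255, 255, 255, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]

dilated = dilate(mask)
for row in dilated:
    print(row)
```

The speckle in the middle is filled in, and the white region grows by one pixel on every side. Stray white speckles elsewhere in the image grow too, so it helps to get the ranges as clean as possible first.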

Now would be the time to add a FindContours filter. FindContours is a blob detector: it groups pixels together and defines polygons around them. It is useful for finding the relative location of the pink ball, its center, its size, and even its number of edges. However, this filter is computationally expensive, and the cost grows with the number of blobs in view. The more blobs, the more time it takes to find them, so it's best to have a very clean and simple image before sending it to FindContours. In this case it took 225 milliseconds for all filters, including FindContours, to process.

We can now see that the pink ball is an 8-sided polygon (octagon), its center is at x 293, y 198, and its bounding box is 103 x 103 pixels, giving a rough area of 10609 pixels.
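To show where numbers like the bounding box, center, and rough area come from, here is a sketch that groups white pixels into blobs with a simple flood fill. To be clear, this is a stand-in technique: FindContours traces polygon outlines, which this code does not do, and `find_blobs` and the small mask are hypothetical names and data for illustration only.

```python
from collections import deque

def find_blobs(mask):
    """Group 4-connected white pixels and report each group's bounding box."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] == 0 or seen[r][c]:
                continue
            # Flood fill outward from this unvisited white pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            min_r = max_r = r
            min_c = max_c = c
            count = 0
            while queue:
                y, x = queue.popleft()
                count += 1
                min_r, max_r = min(min_r, y), max(max_r, y)
                min_c, max_c = min(min_c, x), max(max_c, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            width = max_c - min_c + 1
            height = max_r - min_r + 1
            blobs.append({
                "x": min_c, "y": min_r, "width": width, "height": height,
                "center": (min_c + width / 2.0, min_r + height / 2.0),
                "box_area": width * height,  # rough area: the bounding box
                "pixels": count,             # actual number of white pixels
            })
    return blobs

# Hypothetical mask: one larger blob plus a single stray speckle.
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 255, 255, 255, 0, 0],
    [0, 255, 255, 255, 0, 0],
    [0, 0, 255, 0, 0, 255],
]

blobs = find_blobs(mask)
for blob in blobs:
    print(blob)

# The rough area quoted above is simply the bounding box width times height.
ball_box_area = 103 * 103
print(ball_box_area)
```

Every stray speckle becomes its own blob that has to be visited and measured, which gives a feel for why a clean mask keeps this step cheap.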

With configuration, the polygons can be sent to other Services, such as motors or servos, which in turn might make a robot roll towards or attempt to grasp the pretty pink ball.
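As one idea of what a receiving service might do with the ball's center, here is a small sketch that turns it into a normalized offset from the middle of the frame. The 640 x 480 frame size is purely an assumption (the tutorial does not state the webcam resolution), and `steering_error` is a made-up helper, not a MyRobotLab call.

```python
def steering_error(center, frame_size):
    """Offset of a point from the frame center, scaled to the range -1 to 1."""
    cx, cy = center
    width, height = frame_size
    half_w, half_h = width / 2.0, height / 2.0
    return (cx - half_w) / half_w, (cy - half_h) / half_h

# Ball center from the tutorial; the frame size is an assumed value.
pan_error, tilt_error = steering_error((293, 198), (640, 480))
print(pan_error, tilt_error)
```

Under that assumption both errors are small and negative, meaning the ball sits slightly left of and above the frame center. A pan or drive controller could then turn a little in that direction until the errors approach zero.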

GO GET THAT BALL!